LeetCode’s Java ecosystem is evolving beyond mere algorithmic correctness, with a growing emphasis on code quality metrics such as readability, maintainability, and performance profiling. Practitioners are advancing from writing bare-bones solutions to refining their work through rigorous complexity analysis and structured refactoring.

LeetCode-in-Java, a prominent community resource hosting over 300 Java-based interview questions, lists the time complexity and space usage for each problem, aiding developers in benchmarking their approaches. But coding interviews today demand more than a correct answer: they also probe broader software design skills. A Medium feature on refactoring emphasises this shift, urging developers to assess time and space complexity first and then improve the clarity and structure of the code once a working solution is in place.

Industry voices criticise LeetCode’s environment for fostering over-optimised, single-run code that fails to reflect real-world requirements. On Reddit, one experienced engineer noted that many “optimal” solutions are impractical once specifications evolve, and urged a focus on maintainability over micro-optimisation. As a result, many practitioners now systematically refactor their LeetCode solutions using recognised Java refactoring strategies: meaningful naming, method extraction, named constants in place of magic numbers, and removal of duplicated code.
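The refactoring strategies named above can be illustrated with a minimal Java sketch. The example is hypothetical and not drawn from any of the cited sources: it assumes an anagram-check problem whose inputs contain only the lowercase letters a to z, and the names `AnagramChecker`, `ALPHABET_SIZE`, `FIRST_LETTER` and `countLetters` are invented for illustration.

```java
import java.util.Arrays;

/** Illustrative sketch: an anagram check after a readability-focused refactor. */
final class AnagramChecker {

    // Named constants replace the bare 26 and 'a' that terse solutions often inline.
    // Assumption: inputs contain only lowercase letters 'a' to 'z'.
    private static final int ALPHABET_SIZE = 26;
    private static final char FIRST_LETTER = 'a';

    /** Returns true if both words use exactly the same letters. O(n) time, O(1) extra space. */
    static boolean isAnagram(String first, String second) {
        if (first.length() != second.length()) {
            return false;
        }
        // One extracted helper serves both inputs instead of two copied loops.
        return Arrays.equals(countLetters(first), countLetters(second));
    }

    /** Counts how often each lowercase letter occurs in the word. */
    private static int[] countLetters(String word) {
        int[] counts = new int[ALPHABET_SIZE];
        for (int i = 0; i < word.length(); i++) {
            counts[word.charAt(i) - FIRST_LETTER]++;
        }
        return counts;
    }

    public static void main(String[] args) {
        System.out.println(isAnagram("anagram", "nagaram")); // prints true
        System.out.println(isAnagram("rat", "car"));         // prints false
    }
}
```

The asymptotic cost is the same as that of a terse single-method version; the refactor changes only how easily the intent can be read and reused.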

Empirical software-engineering research reinforces the importance of such practices. A 2022 study tracking 785,000 Java methods found that keeping methods under 24 lines significantly reduced maintenance effort. Similarly, classes named with suffixes like “Utils” or “Handler” were shown to harbour disproportionately high complexity, underlining the need for careful class design in production code. LeetCode solutions are small in scale, but holding them to production-grade discipline mirrors professional development standards.

Runtime benchmarks on LeetCode can mislead when evaluating complexity. As one software engineer commented on LinkedIn, the same submission often records drastically different results between runs, sometimes ranging from the top 9% to the bottom 49%, which makes single-run statistics unreliable. The consensus is that complexity analysis should rely on theoretical Big-O reasoning and profiling tools rather than on platform-dependent runtime rankings.
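The run-to-run variability described above can be observed locally. The following sketch is an illustration rather than a rigorous benchmark: it times identical work several times with `System.nanoTime()` and reports the spread. The class name `RepeatedTiming`, the input size and the run count are arbitrary assumptions. For serious measurement, a dedicated harness such as JMH is the usual recommendation, because naive timing loops are distorted by JIT warm-up and garbage collection.

```java
import java.util.Arrays;

/** Illustrative sketch: time the same task repeatedly to expose run-to-run noise. */
final class RepeatedTiming {

    /** Runs the task the given number of times and returns each elapsed time in nanoseconds. */
    static long[] timeRepeatedly(Runnable task, int runs) {
        long[] elapsedNanos = new long[runs];
        for (int run = 0; run < runs; run++) {
            long start = System.nanoTime();
            task.run();
            elapsedNanos[run] = System.nanoTime() - start;
        }
        return elapsedNanos;
    }

    public static void main(String[] args) {
        int[] data = new int[200_000];
        for (int i = 0; i < data.length; i++) {
            data[i] = data.length - i; // reverse-sorted input
        }
        // Identical work on every run: sort a fresh copy of the same array.
        long[] samples = timeRepeatedly(() -> Arrays.sort(data.clone()), 10);
        long fastest = Arrays.stream(samples).min().getAsLong();
        long slowest = Arrays.stream(samples).max().getAsLong();
        System.out.printf("fastest=%d ns, slowest=%d ns%n", fastest, slowest);
    }
}
```

The exact figures depend on the machine and JVM, which is the point: a single sample says little about the algorithm itself.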

Leading Java‑centric LeetCode repositories, such as Stas Levin’s “LeetCode‑refactored,” offer annotated solutions that juxtapose common community code with clean‑code versions enhanced for readability and maintainability. These repositories emphasise abstractions that separate concerns and reflect developers’ mental models—crucial traits for collaborative engineering.

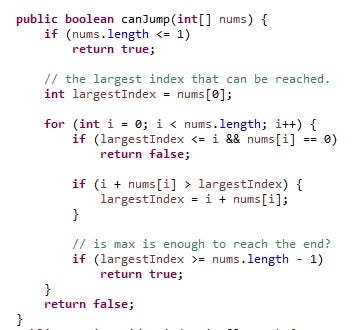

Comparing different practitioners’ approaches highlights notable contrasts. Algorithm‑centric contributions typically deliver maximum efficiency but often sacrifice clarity. By contrast, clean‑code variants—extracted into discrete helper methods, elegantly named, and trimmed of magic numbers—raise maintainability, occasionally at the cost of some performance headroom. Experts recommend a balanced trade‑off: start with a correct algorithm, validate its complexity, then refactor iteratively, keeping an eye on time/space costs.
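One platform-independent way to validate complexity before refactoring is to count basic operations instead of measuring wall-clock time. The sketch below is a hypothetical illustration, with the class name `PairSearchStepCount` and the worst-case input chosen for the example: it instruments a brute-force pair search and doubles the input size. If the count roughly quadruples with each doubling, that is consistent with quadratic growth.

```java
/** Illustrative sketch: count inspected pairs to check growth without timing anything. */
final class PairSearchStepCount {

    /**
     * Brute-force search for two elements that sum to target.
     * Returns how many pairs were inspected before stopping.
     */
    static long countInspectedPairs(int[] numbers, int target) {
        long inspected = 0;
        for (int i = 0; i < numbers.length; i++) {
            for (int j = i + 1; j < numbers.length; j++) {
                inspected++;
                if (numbers[i] + numbers[j] == target) {
                    return inspected;
                }
            }
        }
        return inspected;
    }

    public static void main(String[] args) {
        for (int size = 1_000; size <= 8_000; size *= 2) {
            // All zeros, so no pair sums to 1: the search inspects every pair (worst case).
            int[] zeros = new int[size];
            System.out.println(size + " elements -> " + countInspectedPairs(zeros, 1) + " pairs inspected");
        }
    }
}
```

In the worst case the count is n(n-1)/2, so 1,000 elements give 499,500 inspections and 2,000 elements give 1,999,000. The same counter can be re-run after each refactoring step to confirm that the cost has not changed.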

Several areas for improvement remain. Developers often overlook test coverage when practising LeetCode, missing the chance to adopt red-green-refactor cycles anchored in unit tests, a best practice in professional Java work. Object-oriented design is also rarely exercised: solutions are frequently written as static functions, bypassing opportunities to modularise logic into cohesive classes. Catch-all “Utils” classes, a common convention in shared repositories, often lack cohesion, a weakness that the empirical studies also flag.
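A test-first habit can be sketched without any external dependency. The example below is hypothetical: the class names `BracketValidator` and `BracketValidatorChecks` are invented, the problem is a bracket-matching exercise chosen for illustration, and plain checks stand in for a test framework such as JUnit, which a real project would normally use. The checks are listed first, as they would be written first in a red-green-refactor cycle, and the solution is a small instance-based class rather than a static function.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.Map;

/** Checks written before the implementation; each one fails ("red") until the code supports it. */
final class BracketValidatorChecks {

    private static void check(boolean condition, String description) {
        if (!condition) {
            throw new AssertionError("failed: " + description);
        }
    }

    public static void main(String[] args) {
        BracketValidator validator = new BracketValidator();
        check(validator.isBalanced("()[]{}"), "matching pairs are balanced");
        check(validator.isBalanced("{[()]}"), "nested pairs are balanced");
        check(!validator.isBalanced("(]"), "mismatched closer is rejected");
        check(!validator.isBalanced("(("), "unclosed opener is rejected");
        check(validator.isBalanced(""), "empty input is balanced");
        System.out.println("all checks passed");
    }
}

/** Cohesive class with a single responsibility: validating bracket structure. */
final class BracketValidator {

    private static final Map<Character, Character> OPENER_BY_CLOSER =
            Map.of(')', '(', ']', '[', '}', '{');

    /** Returns true if every bracket is closed by the matching type in the correct order. */
    boolean isBalanced(String text) {
        Deque<Character> openBrackets = new ArrayDeque<>();
        for (char symbol : text.toCharArray()) {
            if (OPENER_BY_CLOSER.containsValue(symbol)) {
                openBrackets.push(symbol);
            } else if (OPENER_BY_CLOSER.containsKey(symbol)) {
                char expectedOpener = OPENER_BY_CLOSER.get(symbol);
                if (openBrackets.isEmpty() || openBrackets.pop() != expectedOpener) {
                    return false;
                }
            }
            // Characters that are not brackets are ignored in this sketch.
        }
        return openBrackets.isEmpty();
    }
}
```

With the checks in place, later refactoring of `isBalanced` can proceed in small steps, re-running the checks after each change.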

Despite the limitations of its performance metrics, industry feedback indicates that LeetCode remains valuable for demonstrating disciplined problem solving. Profiles of hiring managers show that they value clean, explainable code, yet technical interviews still often triage candidates on algorithmic performance under time pressure, so balancing both dimensions is essential.