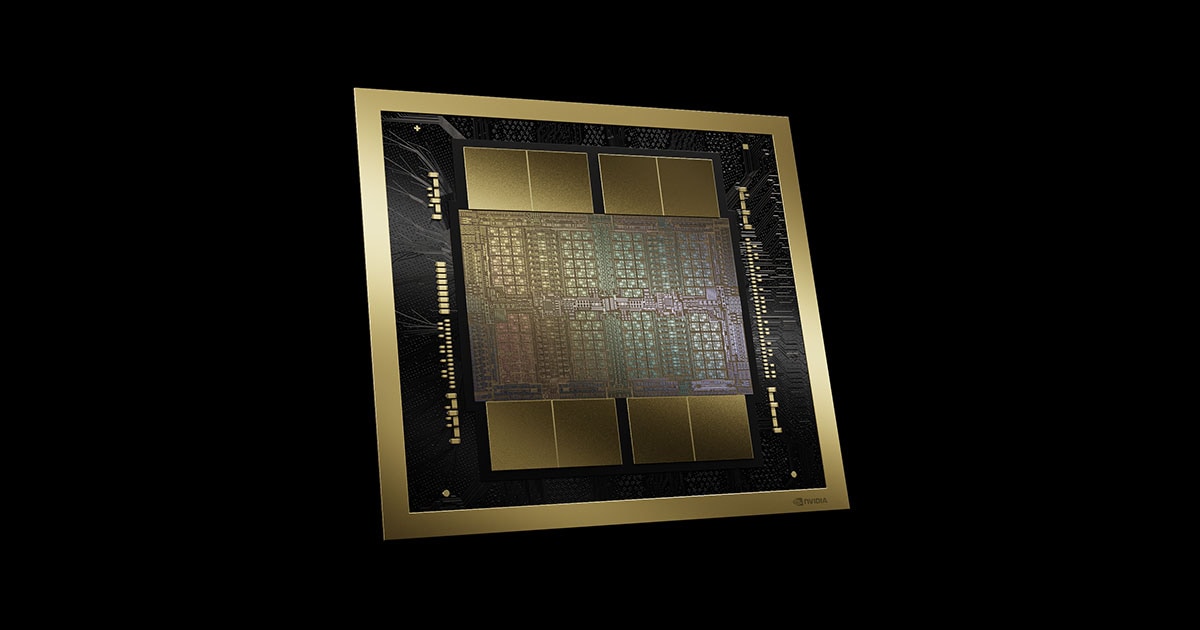

Nvidia is set to begin production of a new, lower‑spec Blackwell‑architecture AI chip for the Chinese market as early as June, aimed at complying with US export controls while maintaining its presence in China’s booming data centre sector. Priced between $6,500 and $8,000, well below the $10,000–$12,000 H20, the chip gives up performance to stay within current export rules.

The streamlined variant is based on the RTX Pro 6000D, utilising conventional GDDR7 memory instead of high‑bandwidth memory and eschewing TSMC’s advanced CoWoS packaging to stay within US‑mandated memory bandwidth limits of 1.7–1.8 TB/s. Industry analysts note this is markedly below the 4 TB/s capability of the H20.

Yet Nvidia is not stopping there. As part of a dual‑chip strategy, it is reportedly developing a second Blackwell model scheduled to enter production by September. The specifications remain undisclosed but are expected to similarly align with US export regulations.

China accounts for around 13 per cent of Nvidia’s revenue, a slice that has shrunk sharply from its pre‑2022 peak because of export restrictions. Over the same period, the company’s share of the Chinese market has fallen from roughly 95 per cent to about 50 per cent. A ban on the H20 in April prompted the company to write off about $5.5 billion in inventory and forgo an estimated $15 billion in sales.

CEO Jensen Huang has acknowledged the company’s “limited” options, stating that until a compliant design is approved by US authorities, Nvidia remains locked out of China’s $50 billion data centre market. Without such a chip, his comments suggest, many customers may migrate to domestic alternatives like Huawei’s Ascend 910B.

Domestic competition in China has intensified. Huawei’s Ascend 910B and other domestic chips have gained momentum and, according to semiconductor analysts, could close the gap with Nvidia’s downgraded models within one to two years. Nvidia’s counterweight is its CUDA programming ecosystem, a key competitive advantage. Because CUDA is deeply integrated into AI development workflows globally, it may anchor developer loyalty even as hardware capabilities shift.

Further complicating the picture are whispers of a third chip, internally known as “B30” or “B40”, which appears designed to operate in clusters and to comply with new export rules that apply restrictions to individual chips rather than entire systems. Reports indicate interest from China’s leading tech companies, including ByteDance, Alibaba and Tencent, with plans reportedly calling for more than 1 million units to be produced this year. Nvidia has not directly confirmed the chip, and one Chinese supplier, ZJK Industrial, briefly announced a production ramp‑up for the B40 before retracting the statement, fuelling market speculation.

US officials have voiced concern that widespread availability of such chips, even within controlled clusters, could bolster China’s capabilities in foundation model training and inference. Commerce Department Under‑Secretary Jeffrey Kessler recently told lawmakers that AI chip smuggling “is happening”, echoing longstanding fears about export diversion. Nvidia’s Huang maintains there is “no evidence” of diversion, but a persistent black‑market narrative continues to shadow the sector.

Chinese firms have outlined plans to deploy thousands of H100 and H200 chips in new data centres, particularly in western provinces such as Xinjiang and Qinghai, where abundant renewable power and cool climates offer logistical advantages. Taken together, the plans call for upwards of 115,000 such processors, most of which remain aspirational because of export prohibitions and logistical challenges.

Analysts suggest that while Nvidia’s tiered Blackwell chips may temporarily satisfy China’s demand for AI infrastructure, the deeper impact will depend on regulatory outcomes and the strength of the CUDA ecosystem. If export restrictions ease, Nvidia stands to regain momentum; if they tighten, domestic chipmakers like Huawei will likely secure a larger foothold.

This unfolding scenario underscores a shift in semiconductor geopolitics: global tech leaders are adapting designs to fragmented access zones while local players race to fill the vacuum. The episode illustrates how export policy, market demand and strategic software assets intersect in shaping tomorrow’s AI hardware landscape.